As artificial intelligence plays a growing role in content creation, recognising whether a text was written by a human or generated by a machine has become more important than ever. In academia, journalism, and marketing, AI detectors are now the main tools used to check authenticity. But what exactly do these tools do, and how trustworthy are they? This guide covers the theory behind AI detection, the methods detectors use, the popular tools, and the reliability problems that remain.

What are artificial intelligence (AI) detectors?

AI content detectors, also called AI writing detectors, are computer programs that estimate whether a text or other content was written by a human or generated by an AI model such as ChatGPT. They are widely used in education, publishing, journalism, and corporate content validation. Their primary purpose is to analyse text for patterns typical of AI-generated writing, such as uniform sentence structure, little variation, and predictable language.

These systems use machine learning (ML) and natural language processing (NLP) to compare submitted text against large collections of human-written and AI-generated samples. Universities, for example, use detectors to uphold academic integrity by assessing how likely it is that essays and dissertations are original work. Similarly, journal editors use these tools to check whether submissions were produced with generative AI tools.

How Do AI Detectors Work?

AI detectors perform a layered analysis that combines linguistic, statistical, and machine learning methods. A typical detector works roughly as follows:

1. Tokenization and preprocessing

The detector first splits the input text into small units called tokens, which may be whole words or sub-word fragments. This lets the system analyse word frequency and context effectively. The text is often normalised as well (lowercased, stripped of punctuation, and cleared of extra spaces) to reduce variability.
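To make the preprocessing step concrete, here is a minimal Python sketch. It is a simplification: it produces word-level tokens with a regular expression, whereas production detectors typically rely on the sub-word tokenizer of their underlying language model. The function names `normalise` and `tokenize` are illustrative and do not come from any particular tool.

```python
import re

def normalise(text: str) -> str:
    """Lowercase, strip punctuation, and collapse runs of whitespace."""
    text = text.lower()
    text = re.sub(r"[^\w\s]", "", text)       # drop punctuation characters
    return re.sub(r"\s+", " ", text).strip()   # collapse extra spaces

def tokenize(text: str) -> list[str]:
    """Split normalised text into word-level tokens (a stand-in for sub-word tokenizers)."""
    return normalise(text).split()

print(tokenize("The  model writes, and  the model  REWRITES!"))
# ['the', 'model', 'writes', 'and', 'the', 'model', 'rewrites']
```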

2. Feature analysis

After tokenization, the software analyses several features of the text:

- Perplexity: measures how predictable each word is in its context, according to a language model. Lower perplexity means the text is highly predictable, which is characteristic of AI-generated text.
- Burstiness: measures variation in sentence length and structure. Human writing tends to be burstier, because people naturally vary how they think and express themselves.
- N-gram patterns: looks for repeated sequences of words. Because AI models are trained on large datasets, they tend to reproduce common phrase patterns, which shows up as repetition.
- Vocabulary complexity: compares word choice and sentence structure with known patterns of human and AI writing.
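The sketch below computes toy versions of these four features in plain Python. Every definition is an assumption chosen for illustration: burstiness is taken as the coefficient of variation of sentence lengths, vocabulary complexity is approximated by the type-token ratio, and perplexity comes from a tiny add-one-smoothed bigram model rather than the large neural language model a real detector would use. All function names are hypothetical.

```python
import math
import re
from collections import Counter

def sentences(text):
    # Naive split: break after ".", "!" or "?" followed by whitespace
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def words(text):
    # Lowercase word tokens (letters and apostrophes only)
    return re.findall(r"[a-z']+", text.lower())

def burstiness(text):
    """Coefficient of variation of sentence lengths: std / mean (0 = perfectly uniform)."""
    lengths = [len(words(s)) for s in sentences(text)]
    mean = sum(lengths) / len(lengths)
    variance = sum((n - mean) ** 2 for n in lengths) / len(lengths)
    return math.sqrt(variance) / mean

def repeated_ngrams(text, n=3):
    """Word n-grams that occur more than once, with their counts."""
    toks = words(text)
    grams = Counter(tuple(toks[i:i + n]) for i in range(len(toks) - n + 1))
    return {gram: count for gram, count in grams.items() if count > 1}

def type_token_ratio(text):
    """Distinct words / total words: a crude proxy for vocabulary richness."""
    toks = words(text)
    return len(set(toks)) / len(toks)

def bigram_perplexity(text, reference):
    """Perplexity of `text` under an add-one-smoothed bigram model built from `reference`."""
    ref = words(reference)
    toks = words(text)
    vocab_size = len(set(ref) | set(toks))
    history = Counter(ref[:-1])            # how often each word starts a bigram
    bigrams = Counter(zip(ref, ref[1:]))
    log_prob = 0.0
    for prev, cur in zip(toks, toks[1:]):
        p = (bigrams[(prev, cur)] + 1) / (history[prev] + vocab_size)
        log_prob += math.log(p)
    return math.exp(-log_prob / (len(toks) - 1))
```

Under these definitions, a lower `bigram_perplexity` and a lower `burstiness` would both point towards more AI-like text, but no single number is decisive on its own.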

3. Embedding and semantic analysis

The detector may also convert the text into numerical vectors (embeddings) that represent its meaning. The system can then compare these vectors with databases of known AI-written and human-written texts to assess stylistic similarity.
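As a rough illustration of comparing a text against labelled references, the sketch below uses a bag-of-words count vector as a stand-in for a learned embedding and measures similarity with cosine similarity. Real detectors would use a neural embedding model and far larger reference sets; `embed`, `nearest_reference`, and the two sample references are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy 'embedding': a sparse bag-of-words count vector.
    A real detector would use a neural embedding model instead."""
    return Counter(re.findall(r"[a-z']+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b.get(k, 0) for k in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def nearest_reference(text, references):
    """Return (label, similarity) for the most similar labelled reference text."""
    vec = embed(text)
    scored = [(label, cosine(vec, embed(ref))) for label, ref in references]
    return max(scored, key=lambda pair: pair[1])

# Hypothetical labelled reference texts
references = [
    ("ai", "it is important to note that in conclusion it is important"),
    ("human", "honestly i winged it and hoped for the best"),
]
print(nearest_reference("It is important to note this.", references))
```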

4. Machine learning classification

At the core of most detectors is a classifier trained on labelled data. The system is trained on samples of both human-written and AI-generated text so that it learns to recognise their patterns and make predictions. These models range from simple statistical classifiers to complex deep learning models.

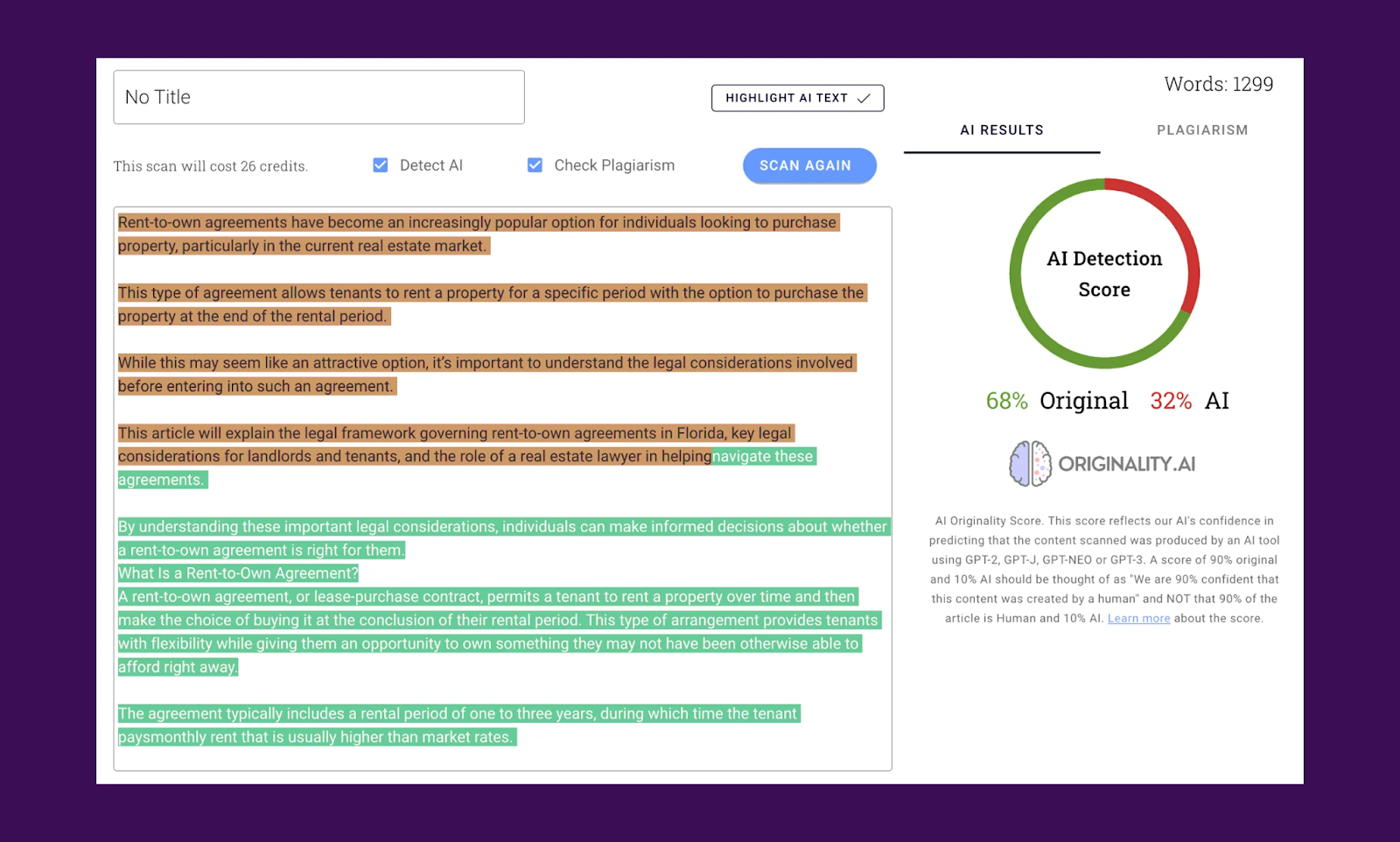
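A classifier of this kind can be sketched as a logistic regression trained by gradient descent. This is only one of many possible model choices, and the two features (burstiness and type-token ratio) and the six training examples below are invented for illustration; they are not real measurements.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(X, y, lr=1.0, epochs=5000):
    """Fit logistic-regression weights with batch gradient descent.
    X: list of feature vectors; y: labels (1 = AI-generated, 0 = human)."""
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            # Prediction error for this example
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        # Average-gradient update step
        w = [wj - lr * gj / len(X) for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / len(X)
    return w, b

def predict_proba(w, b, x):
    """Probability that feature vector x belongs to the AI class."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

# Made-up training data: [burstiness, type-token ratio] per document
X = [[0.10, 0.40], [0.15, 0.45], [0.12, 0.42],   # labelled AI
     [0.60, 0.70], [0.55, 0.65], [0.70, 0.75]]   # labelled human
y = [1, 1, 1, 0, 0, 0]
w, b = train_logistic(X, y)
print(predict_proba(w, b, [0.11, 0.41]))  # low burstiness: expected to lean towards AI
```

Logistic regression is used here because it outputs a probability directly, which feeds naturally into the scoring step that follows.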

5. Probability scoring

Finally, the detector produces a score indicating how likely it is that the text was written by AI. Scores are usually given as percentages (e.g. "87% AI-generated") or as confidence levels (e.g. "likely human").
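The sketch below shows one way a raw probability could be turned into the kind of score and label described above. The cut-offs of 0.35 and 0.65, and the "uncertain" band between them, are hypothetical choices; each tool sets its own thresholds.

```python
def format_score(p_ai, low=0.35, high=0.65):
    """Turn a probability of AI authorship into a percentage and a label.
    The default cut-offs are illustrative, not any vendor's actual values."""
    if not 0.0 <= p_ai <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if p_ai >= high:
        label = "likely AI"
    elif p_ai <= low:
        label = "likely human"
    else:
        label = "uncertain"
    return f"{round(p_ai * 100)}% AI-generated ({label})"

print(format_score(0.87))  # 87% AI-generated (likely AI)
```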

6. Final determination and cross-referencing

Some tools also compare the text against registries of known AI outputs or run additional, more semantically sensitive checks. The final report may highlight questionable passages or phrases to assist human reviewers.
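Highlighting questionable passages can be sketched as scoring each sentence separately and keeping those above a threshold. Here `toy_score` is a deliberately crude, hypothetical stand-in that looks for a few stock phrases; a real tool would call its trained model instead, and the 0.8 threshold is an arbitrary assumption.

```python
import re

def split_sentences(text):
    # Naive split after ".", "!" or "?" followed by whitespace
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def flag_sentences(text, score_fn, threshold=0.8):
    """Return (sentence, score) pairs whose AI score meets the threshold,
    so a human reviewer can inspect just those passages."""
    flagged = []
    for sentence in split_sentences(text):
        score = score_fn(sentence)
        if score >= threshold:
            flagged.append((sentence, score))
    return flagged

# Hypothetical stand-in scorer: a real tool would call its trained model here.
STOCK_PHRASES = ("it is important to note", "in conclusion", "delve into")

def toy_score(sentence):
    s = sentence.lower()
    return 0.9 if any(p in s for p in STOCK_PHRASES) else 0.1

report = flag_sentences(
    "I missed the bus again. It is important to note that punctuality matters.",
    toy_score,
)
print(report)  # [('It is important to note that punctuality matters.', 0.9)]
```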

Limitations of AI detectors

While AI detection tools are increasingly advanced, they are far from perfect. Here are some key limitations:

False positives

Detectors sometimes mislabel human-written content, particularly academic or formal writing, as AI-generated. Non-native English speakers are especially vulnerable to this issue due to their structured sentence style.

False negatives

Advanced AI models like GPT-4 can produce content that is nearly indistinguishable from human writing. In such cases, detectors might not flag AI-generated content at all.

Model adaptation

As AI models continue to evolve rapidly, detectors must constantly be updated. An outdated detector may perform poorly against the latest AI systems.

Lack of context

AI detectors analyse text in isolation. They don’t understand the intent, context, or nuances that a human might notice. This can lead to misclassification.

Easy bypass methods

Simple tactics like adding spelling mistakes, changing sentence structure, or using synonyms can fool AI detectors.

AI detectors vs. plagiarism checkers

Though similar in function, AI detectors and plagiarism checkers serve different purposes:

| Feature | AI detectors | Plagiarism checkers |
| --- | --- | --- |
| Purpose | Identify AI vs. human authorship | Identify copied or unoriginal content |
| Method | Use ML to detect patterns of AI writing | Use databases to match identical or paraphrased content |
| Limitations | Struggle with advanced AI or subtle human edits | Cannot detect AI-generated text that uses original phrasing |

Using both tools together can offer a more complete picture of content authenticity.
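To illustrate the difference in method, a plagiarism checker's matching step can be approximated by splitting texts into overlapping word sequences ("shingles") and measuring their Jaccard similarity against stored documents. This is a simplified sketch: commercial checkers use their own, more robust fingerprinting and paraphrase detection, and the two-document database here is hypothetical.

```python
import re

def shingles(text, n=3):
    """Set of overlapping word n-grams ("shingles")."""
    toks = re.findall(r"[a-z']+", text.lower())
    return {tuple(toks[i:i + n]) for i in range(len(toks) - n + 1)}

def jaccard(a, b):
    """Size of the intersection divided by size of the union (0.0 for two empty sets)."""
    return len(a & b) / len(a | b) if a | b else 0.0

def best_match(submission, database, n=3):
    """Return (source_id, similarity) of the most similar stored document."""
    sub = shingles(submission, n)
    return max(((doc_id, jaccard(sub, shingles(doc, n)))
                for doc_id, doc in database.items()),
               key=lambda pair: pair[1])

# Hypothetical stored documents
database = {
    "doc-1": "The quick brown fox jumps over the lazy dog.",
    "doc-2": "Detectors estimate the probability that a text was generated by AI.",
}
print(best_match("A quick brown fox jumps over the lazy dog!", database))  # ('doc-1', 0.75)
```

Because this score only measures overlap with stored documents, freshly generated AI text with original phrasing would score close to zero, which is exactly the limitation listed in the table.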

Are AI detectors reliable?

AI detectors provide useful insights, but they are never 100 per cent accurate. Studies report accuracy ranging between 70 and 90 per cent. Tools such as Originality.ai, GPTZero, and Copyleaks are generally considered more reliable than others, and they can also be used in combination.

Nevertheless, even the best tools make mistakes, particularly with creative or unconventional writing. Detectors also tend to be biased against non-native writers and formal writing styles.

For this reason, AI detectors should be treated as advisory tools rather than final arbiters, and their results should always be checked by a human reviewer.
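To put the reported accuracy range into perspective, the short calculation below estimates how many verdicts might be wrong in a batch of 200 essays. It assumes, for simplicity, that every document has the same chance of being classified correctly, which real detectors do not guarantee; `expected_misclassified` is a hypothetical helper.

```python
def expected_misclassified(n_documents, accuracy):
    """Expected number of wrong verdicts, assuming each document is classified
    correctly with the same probability `accuracy` (a simplifying assumption)."""
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    return n_documents * (1.0 - accuracy)

for acc in (0.70, 0.80, 0.90):
    wrong = expected_misclassified(200, acc)
    print(f"{acc:.0%} accurate -> about {wrong:.0f} of 200 essays misjudged")
```

Even at 90 per cent accuracy, that is roughly 20 essays out of 200 given the wrong verdict, and at 70 per cent it rises to about 60.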

Popular AI detection tools

- Originality.ai: Used by professionals; known for high GPT-4 detection rates.
- GPTZero: Popular in education; highlights AI-written sentences using burstiness and perplexity.
- ZeroGPT: Free tool with probability scoring; suitable for light use.
- Copyleaks AI Detector: Combines AI detection with plagiarism checking; supports multiple languages.
- Turnitin AI Writing Detection: Integrated into academic settings; sometimes criticised for false positives.
- Grammarly AI Content Detector: Useful for professionals and business users.
- Writer.com Detector: Business-oriented tool; gives clear verdicts such as "likely AI" or "likely human".

Final thoughts

Using AI detectors is ethically acceptable, especially in academic and professional environments. However, it's vital to:

- Acknowledge their limitations.
- Avoid relying on a single tool.
- Manually cross-check the results when necessary.
- Use them to improve quality, not to punish blindly.

AI detectors are playing an increasingly important role in ensuring content authenticity. However, although they use sophisticated methods such as machine learning, perplexity analysis, and stylometry, they still make mistakes. False positives and false negatives are inevitable, so human judgement remains central. The best results come from combining detectors with manual review.

As AI models evolve, the tools and standards we use to evaluate their output must evolve too. Understanding how AI detectors work enables content creators, educators, and editors to use them wisely, helping to keep content credible and original in the age of artificial intelligence.